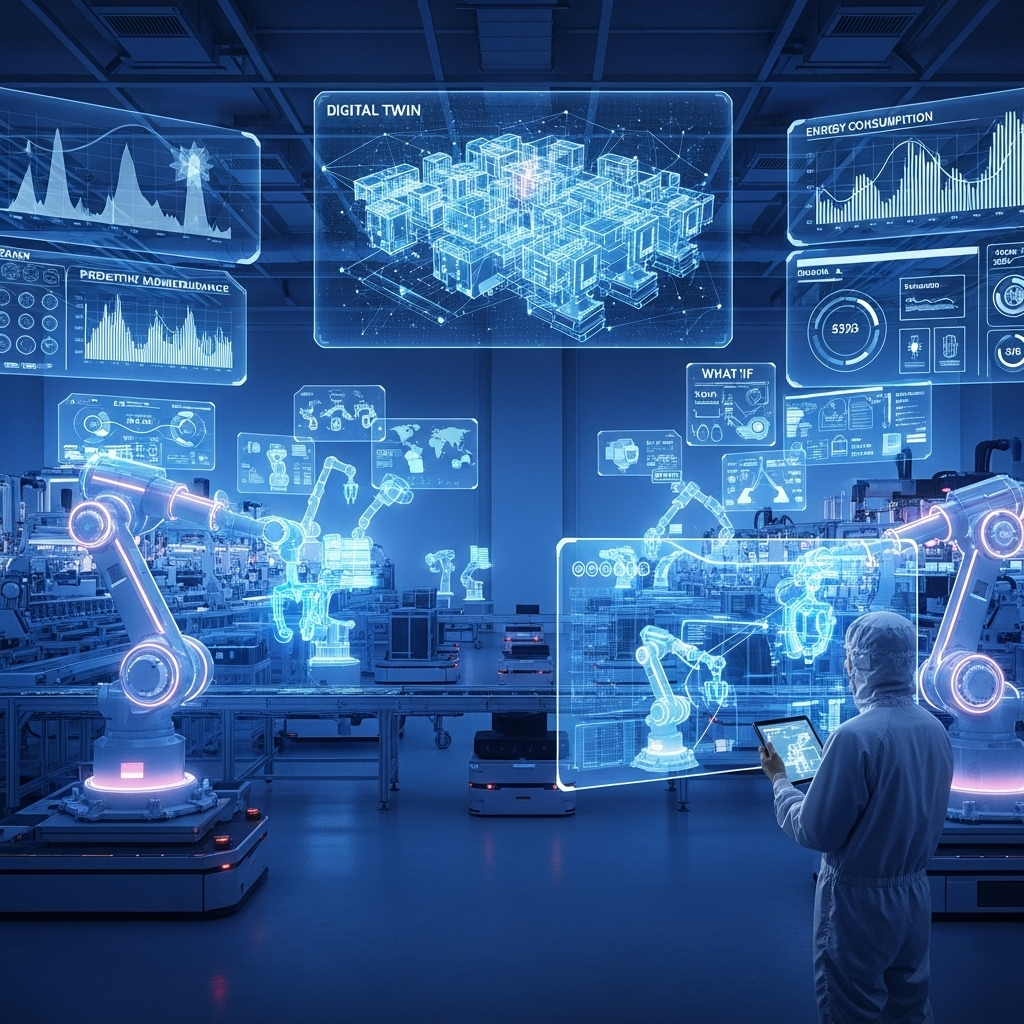

How Can Digital Twin Robotics Transform Modern Factories into Resilient, Adaptive Systems?

Executive summary

Digital twin robotics unites physical automation assets (robot arms, conveyors, PLCs, sensors) with a live, versioned virtual replica to enable safe experimentation, predictive maintenance, and continuous process optimization. This post explores the engineering architecture, data flows, algorithms, operational practices, and governance required to deliver resilient, adaptive manufacturing systems that reduce downtime, accelerate change cycles, and improve safety and product quality.

1. The value proposition

Adopting digital twins for robotics offers three primary categories of value: risk reduction (validate firmware/trajectory changes offline), operational resilience (predictive insights that reduce unplanned stoppages), and continuous improvement (what-if simulation to tune throughput and energy consumption). These benefits translate into measurable KPIs—reduced mean time to repair (MTTR), higher first-pass yield, increased OEE (Overall Equipment Effectiveness), and shorter time-to-deploy for process improvements.
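As a worked illustration of the KPIs named above, here is a minimal sketch that uses the conventional OEE decomposition (Availability × Performance × Quality) and the usual MTTR definition (total repair time divided by number of repairs). The `ShiftRecord` fields and function names are hypothetical and are not taken from any specific MES.

```python
from dataclasses import dataclass


@dataclass
class ShiftRecord:
    """Hypothetical per-shift production record for one robot cell."""
    planned_time_min: float       # planned production time
    downtime_min: float           # unplanned stoppages within the planned time
    ideal_cycle_time_min: float   # ideal time to produce one part
    total_count: int              # parts produced
    good_count: int               # parts that passed inspection the first time


def oee(r: ShiftRecord) -> float:
    """OEE = Availability x Performance x Quality."""
    run_time = r.planned_time_min - r.downtime_min
    availability = run_time / r.planned_time_min
    performance = (r.ideal_cycle_time_min * r.total_count) / run_time
    quality = r.good_count / r.total_count
    return availability * performance * quality


def mttr(repair_durations_min: list[float]) -> float:
    """Mean time to repair: total repair time divided by number of repairs."""
    return sum(repair_durations_min) / len(repair_durations_min)
```

Take a 480-minute shift with 48 minutes of downtime, a 0.5-minute ideal cycle, 800 parts, and 760 good parts. The result is about 0.79 OEE. A twin-driven improvement would show up as a change in one or more of the three factors.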

2. System architecture and integration

Design a layered architecture:

- Device layer: Robot controllers, motor drivers, PLCs, field sensors, safety PLCs, vision systems, and local HMIs.

- Edge gateway: Protocol normalization (OPC-UA, Modbus, EtherCAT), deterministic buffering, time-series pre-aggregation, and local rule enforcement that complements, but never replaces, the interlocks implemented in safety PLCs.

- Digital twin platform: Versioned model repository (mechanics, kinematics, control logic), simulation engine (physics-based and data-driven components), event streaming, and model serving for analytics and visualization.

- Human interface: Dashboards, operator consoles, AR-assisted maintenance views, and change authorization workflows integrated with MES/ERP systems.
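The edge gateway's protocol-normalization role can be sketched as a tag map that converts raw readings from different protocols into one protocol-neutral record. Everything here is an assumption for illustration: the `TelemetryPoint` schema, the tag addresses, and the divide-by-10 scaling of the Modbus register. The sketch does not use any real OPC UA or Modbus client library.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class TelemetryPoint:
    """Protocol-neutral record published by the gateway (hypothetical schema)."""
    asset_id: str
    signal: str
    value: float
    unit: str
    ts_ns: int  # gateway timestamp, nanoseconds since epoch


@dataclass(frozen=True)
class TagMapping:
    """Maps one raw protocol tag onto the common schema."""
    asset_id: str
    signal: str
    unit: str
    convert: Callable[[float], float]  # raw value -> engineering units


# Hypothetical tag map keyed by (protocol, raw address).
TAG_MAP = {
    ("modbus", "40001"): TagMapping("robot-01", "joint1_temp", "degC", lambda raw: raw / 10.0),
    ("opcua", "ns=2;s=Robot01.Joint1.Torque"): TagMapping("robot-01", "joint1_torque", "N*m", float),
}


def normalize(protocol: str, address: str, raw: float, ts_ns: int) -> TelemetryPoint:
    """Convert a raw reading into a TelemetryPoint; unknown tags raise KeyError."""
    m = TAG_MAP[(protocol, address)]
    return TelemetryPoint(m.asset_id, m.signal, m.convert(raw), m.unit, ts_ns)
```

Downstream twin components then consume one schema regardless of the source protocol. Adding a new device means adding a tag-map entry rather than changing analytics code.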

Ensure the architecture supports secure, low-latency telemetry streams and robust fallbacks: if connectivity to the twin is lost, local controllers must continue in a safe operational mode while buffering telemetry for later reconciliation.
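The "buffer telemetry for later reconciliation" fallback can be sketched as a bounded store-and-forward buffer on the gateway. This is a minimal sketch under stated assumptions: the class name and the `(timestamp_ns, signal, value)` record shape are hypothetical. It also assumes that dropping the oldest data is acceptable when the buffer fills, so that the gateway never blocks the control path.

```python
from collections import deque
from typing import Callable, Deque, Tuple

Record = Tuple[int, str, float]  # (timestamp_ns, signal, value), hypothetical shape


class StoreAndForwardBuffer:
    """Bounded buffer that holds telemetry while the twin link is down.

    When full, the oldest record is evicted (deque maxlen) and counted,
    so reconciliation can report the size of any data gap.
    """

    def __init__(self, capacity: int) -> None:
        self._buf: Deque[Record] = deque(maxlen=capacity)
        self.dropped = 0

    def append(self, rec: Record) -> None:
        if len(self._buf) == self._buf.maxlen:
            self.dropped += 1  # the oldest record is about to be evicted
        self._buf.append(rec)

    def flush(self, send: Callable[[Record], bool]) -> int:
        """Replay buffered records in order; stop at the first failed send."""
        sent = 0
        while self._buf:
            if not send(self._buf[0]):
                break  # link still down: keep the remainder for the next attempt
            self._buf.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._buf)
```

The robot controller's safe operating mode does not depend on this buffer. The buffer only decides what telemetry the twin receives once connectivity returns.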

3. Data model and twin fidelity

Balancing model fidelity with compute cost is central. Use a hybrid approach:

- Geometric & kinematic models: CAD-derived 3D geometry with joint limits, reachability maps, and inertia properties to validate collision-free trajectories.

- Control & dynamics: Reduced-order dynamic models for near-real-time simulation; where required, incorporate higher-fidelity FEM-based models for structural analysis offline.

- Synthetic sensors: Simulated sensors emulate their physical edge counterparts so that sensor fusion algorithms and failure modes can be tested without touching production hardware.
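To make the geometric and kinematic layer concrete, here is a minimal sketch of validating trajectory waypoints against joint limits and a keep-out zone. It assumes a hypothetical 2-link planar arm with made-up link lengths, limits, and an axis-aligned keep-out box. A production twin would use the CAD-derived model of the real robot and full collision geometry instead.

```python
import math
from typing import List, Tuple

# Hypothetical 2-link planar arm (metres, radians).
LINK1, LINK2 = 0.4, 0.3
JOINT_LIMITS = [(-math.pi / 2, math.pi / 2), (-2.5, 2.5)]
# Hypothetical keep-out box in the arm's plane: (xmin, ymin, xmax, ymax).
KEEP_OUT = (0.5, -0.1, 0.8, 0.1)


def forward_kinematics(q1: float, q2: float) -> Tuple[float, float]:
    """End-effector position of a planar two-revolute-joint arm."""
    x = LINK1 * math.cos(q1) + LINK2 * math.cos(q1 + q2)
    y = LINK1 * math.sin(q1) + LINK2 * math.sin(q1 + q2)
    return x, y


def validate_trajectory(waypoints: List[Tuple[float, float]]) -> List[str]:
    """Return violations; an empty list means every waypoint passes."""
    errors = []
    xmin, ymin, xmax, ymax = KEEP_OUT
    for i, q in enumerate(waypoints):
        for j, (qj, (lo, hi)) in enumerate(zip(q, JOINT_LIMITS)):
            if not lo <= qj <= hi:
                errors.append(f"waypoint {i}: joint {j + 1} out of limits")
        x, y = forward_kinematics(*q)
        if xmin <= x <= xmax and ymin <= y <= ymax:
            errors.append(f"waypoint {i}: end effector in keep-out zone")
    return errors
```

This sketch checks only the end effector at each waypoint. Checking the links and the interpolated motion between waypoints is what full collision-free validation adds.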

Version models and maintain a model-of-record for each production cell to allow reproducible experiments and regulatory audit trails.
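One way to make the model-of-record reproducible is to identify each model version by a content hash of its canonical parameters. The sketch below is an assumption-laden illustration. The in-memory `ModelRegistry` and its methods are hypothetical. A real repository would also hash the CAD, kinematic, and control-logic files themselves.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict


def model_digest(params: Dict[str, object]) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, no whitespace)."""
    canonical = json.dumps(params, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class ModelRegistry:
    """Hypothetical registry: many versions per cell, one model-of-record."""
    versions: Dict[str, Dict[str, Dict[str, object]]] = field(default_factory=dict)
    of_record: Dict[str, str] = field(default_factory=dict)

    def register(self, cell: str, params: Dict[str, object]) -> str:
        digest = model_digest(params)
        self.versions.setdefault(cell, {})[digest] = params
        return digest

    def promote(self, cell: str, digest: str) -> None:
        """Make a registered version the model-of-record for a cell."""
        if digest not in self.versions.get(cell, {}):
            raise KeyError(f"unknown model {digest} for {cell}")
        self.of_record[cell] = digest
```

Because the digest ignores key order, the same parameters always map to the same identifier. An experiment or audit can therefore cite the exact model it ran against.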

4. Simulation, verification and safety

Before deploying any change (trajectory, tooling, or control parameter), run staged verifications in the twin: unit tests, regression tests, and runtime safety checks. Use software-in-the-loop (SIL) and hardware-in-the-loop (HIL) testing for final verification. Safety-critical updates should traverse a documented gating pipeline: simulation pass → controlled pilot on an offline cell → staged rollout with enhanced monitoring.
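The gating pipeline can be sketched as an ordered sequence of stages, where a missing or failing gate blocks every later stage. The stage names mirror the pipeline described above. The `checks` hooks are hypothetical placeholders for whatever actually runs the simulation suite, the offline pilot, and the rollout monitoring.

```python
from enum import Enum
from typing import Callable, Dict, List


class Stage(Enum):
    SIMULATION = 1       # unit, regression, and runtime safety checks in the twin
    OFFLINE_PILOT = 2    # controlled pilot on an offline cell
    STAGED_ROLLOUT = 3   # rollout with enhanced monitoring


Check = Callable[[str], bool]  # hypothetical hook: change id -> passed?


def run_gates(change_id: str, checks: Dict[Stage, Check]) -> List[Stage]:
    """Run stages in definition order and return those that passed."""
    passed: List[Stage] = []
    for stage in Stage:  # Enum iteration follows definition order
        check = checks.get(stage)
        if check is None or not check(change_id):
            break  # a missing or failing gate blocks every later stage
        passed.append(stage)
    return passed
```

Treating a missing check as a failure is a deliberate fail-closed choice. A change cannot reach rollout simply because nobody configured a gate.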

5. Analytics and machine learning

Analytics layers extract actionable signals from telemetry. Predictive maintenance uses time-series anomaly detection (e.g., spectral residuals, autoencoders) fused with domain features (torque spikes, vibration signatures, temperature trends). Process optimization leverages digital experiments and reinforcement learning or Bayesian optimization to tune cycle times and motion profiles while respecting safety constraints.
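The idea of fusing a statistical anomaly score with a domain feature can be sketched as follows. The sketch swaps in a simpler technique than the spectral-residual or autoencoder detectors named above: a rolling median/MAD robust z-score. It combines that score with a hypothetical rule that flags any torque sample above the joint's rated torque. Function names, window size, and threshold are illustrative assumptions.

```python
import statistics
from typing import List


def robust_zscores(series: List[float], window: int) -> List[float]:
    """Score each point against the median/MAD of the preceding `window` points.

    Points without a full history get a score of 0.0.
    """
    scores = [0.0] * len(series)
    for i in range(window, len(series)):
        hist = series[i - window:i]
        med = statistics.median(hist)
        mad = statistics.median(abs(v - med) for v in hist)
        # 1.4826 * MAD approximates the standard deviation for Gaussian noise.
        scale = 1.4826 * mad if mad > 0 else 1e-9
        scores[i] = (series[i] - med) / scale
    return scores


def flag_torque_anomalies(torque: List[float], rated_torque: float,
                          window: int = 20, z_threshold: float = 5.0) -> List[int]:
    """Indices flagged by the statistical score OR the domain rule."""
    z = robust_zscores(torque, window)
    return [i for i, t in enumerate(torque)
            if abs(z[i]) > z_threshold or t > rated_torque]
```

The median and MAD keep a single spike from inflating the baseline, so the spike itself is what gets flagged. The domain rule catches slow drifts that stay statistically "normal" but exceed a physical limit.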

6. Human factors and operations

Adopt operator-centered design for dashboards and AR aids. Channel twin-driven change through collaborative workflows: engineers propose a simulation-backed change, safety officers and operators review it, and release managers approve it. Training simulations let operators rehearse recovery procedures against realistic simulated faults.
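The propose → review → approve workflow can be sketched as a change request that refuses approval until its preconditions hold. The role strings (`safety_officer`, `operator`, `release_manager`) and the `ChangeRequest` class are hypothetical. In practice this logic would live in the MES or change-management system.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical roles whose sign-off is required before release.
REQUIRED_REVIEWS = {"safety_officer", "operator"}


@dataclass
class ChangeRequest:
    change_id: str
    proposed_by: str
    simulation_passed: bool
    reviews: Set[str] = field(default_factory=set)
    approved: bool = False

    def review(self, role: str) -> None:
        if role not in REQUIRED_REVIEWS:
            raise ValueError(f"role {role!r} cannot review")
        self.reviews.add(role)

    def approve(self, role: str) -> None:
        """Release-manager approval, allowed only when every precondition holds."""
        if role != "release_manager":
            raise PermissionError("only a release manager can approve")
        if not self.simulation_passed:
            raise ValueError("change is not backed by a passing simulation")
        missing = REQUIRED_REVIEWS - self.reviews
        if missing:
            raise ValueError(f"missing reviews: {sorted(missing)}")
        self.approved = True
```

Encoding the workflow this way makes skipped reviews an error rather than a convention.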

7. Governance, security and compliance

Protect the twin and data pipelines through zero-trust network segmentation, encrypted telemetry, role-based access control, and signed model artifacts. For regulated industries, produce an auditable ledger of twin changes, validations, and deployments. Define data retention policies, and redact, anonymize, or restrict access to telemetry that exposes sensitive process IP.
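An auditable ledger of twin changes can be sketched as an append-only, hash-chained log. Each entry commits to the hash of the previous entry, so altering any past record breaks verification. This is a minimal sketch with stated assumptions. HMAC-SHA256 with a shared key stands in for real artifact signing, which would more typically use asymmetric signatures with keys held in secure hardware. The `AuditLedger` class is hypothetical.

```python
import hashlib
import hmac
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLedger:
    """Append-only, hash-chained log of twin events (illustrative only)."""

    def __init__(self, key: bytes) -> None:
        self._key = key
        self.entries: List[Dict[str, str]] = []

    def _body(self, prev: str, event: str, artifact: str) -> bytes:
        return json.dumps({"prev": prev, "event": event, "artifact": artifact},
                          sort_keys=True).encode()

    def append(self, event: str, artifact_digest: str) -> Dict[str, str]:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = self._body(prev, event, artifact_digest)
        entry = {
            "prev": prev,
            "event": event,
            "artifact": artifact_digest,
            "hash": hashlib.sha256(body).hexdigest(),
            "sig": hmac.new(self._key, body, hashlib.sha256).hexdigest(),
        }
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited, reordered, or removed entry fails."""
        prev = GENESIS
        for e in self.entries:
            body = self._body(prev, e["event"], e["artifact"])
            expected_sig = hmac.new(self._key, body, hashlib.sha256).hexdigest()
            if (e["prev"] != prev
                    or e["hash"] != hashlib.sha256(body).hexdigest()
                    or not hmac.compare_digest(e["sig"], expected_sig)):
                return False
            prev = e["hash"]
        return True
```

`hmac.compare_digest` is used for the signature comparison to avoid timing side channels.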

8. Roadmap & adoption pattern

Start small with a pilot cell, preferably a non-critical pick-and-place or CNC cell, then iterate. Measure baseline KPIs, deploy the twin, and validate the gains through A/B production experiments. Scale horizontally across similar cells, then vertically across lines and plants, gradually integrating MES/ERP and enterprise data lakes for cross-facility optimization.
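To judge whether an A/B difference in a KPI (for example, per-shift cycle time in twin-enabled versus baseline cells) is more than noise, one option is Welch's t-test, which does not assume equal variances. The sketch below computes only the t statistic and the Welch-Satterthwaite degrees of freedom using the standard library. The function name is hypothetical.

```python
import math
import statistics
from typing import List, Tuple


def welch_t(a: List[float], b: List[float]) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    na, nb = len(a), len(b)
    se2_a = statistics.variance(a) / na  # squared standard error of mean(a)
    se2_b = statistics.variance(b) / nb
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (na - 1) + se2_b ** 2 / (nb - 1))
    return t, df
```

A p-value comes from the t distribution with `df` degrees of freedom. If SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same Welch test directly.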

Conclusion

Digital twin robotics is not only a simulation technology; it is an operational paradigm that tightens the feedback loop between engineering, operations, and analytics. When executed with rigorous engineering, robust governance, and operator-centered adoption, digital twins materially increase factory resilience, reduce risk, and enable continuous data-driven process improvement.