Question: What role does load balancing play in distributed systems, and how does it improve application performance and reliability?

- A. It distributes incoming traffic evenly across multiple servers, improving reliability, response time, and system scalability.

- B. It encrypts network data to ensure security between servers.

- C. It compresses large application files to reduce bandwidth usage.

- D. It merges multiple databases into one to simplify data storage.

Correct Answer: A

Explanation:

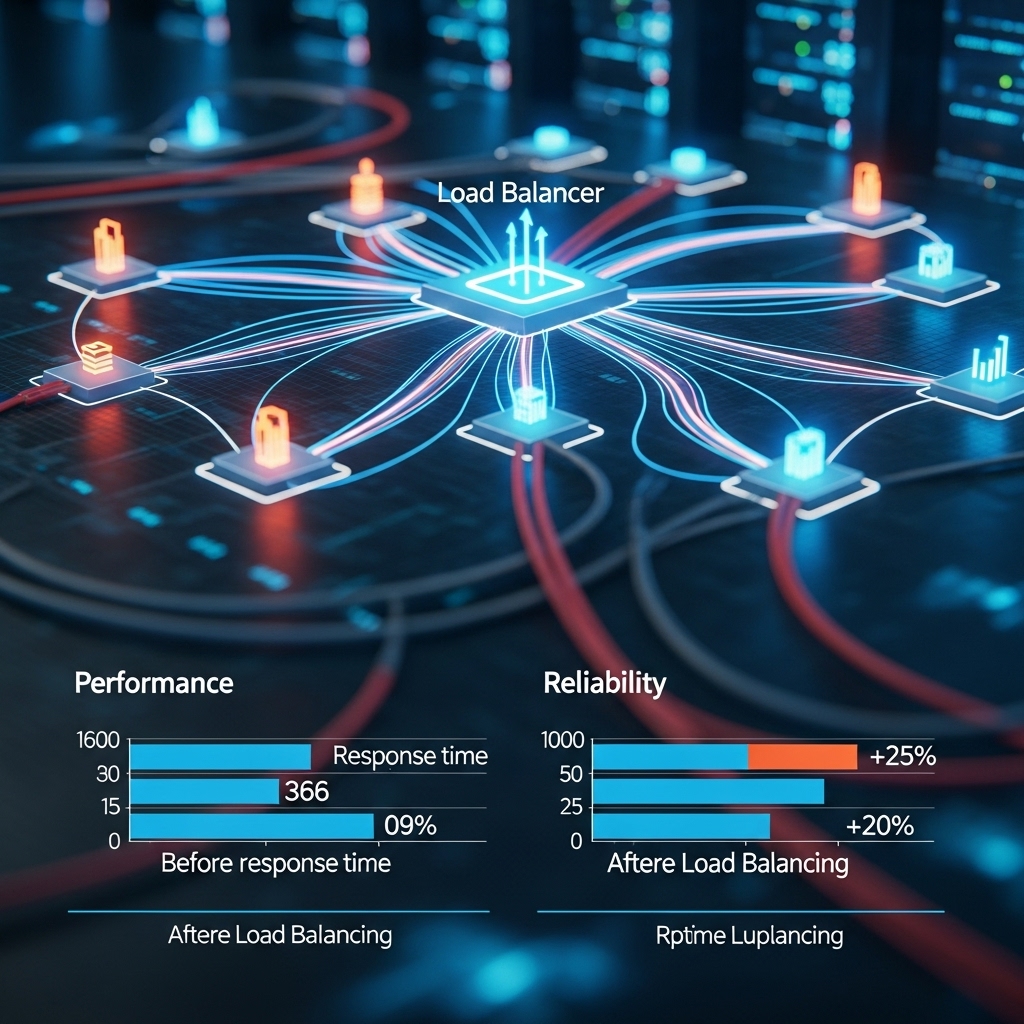

Load balancing is one of the most important concepts in modern distributed systems, cloud computing platforms, microservices architectures, and large-scale web applications. Its core function is to efficiently distribute traffic or incoming requests across multiple servers, preventing overload on any single server and ensuring high availability and optimal performance. Load balancing is a foundational element of scalability and reliability in distributed computing. Without effective load balancing, even applications that run on powerful hardware may suffer from bottlenecks, downtime, high latency, uneven resource utilization, and degraded user experience.

1. What is load balancing?

Load balancing refers to the method of distributing client requests or network traffic across a group of backend servers so that no single server becomes overwhelmed. These backend servers are often referred to as a server pool or cluster. A load balancer sits between the client and the server pool, acting as an intelligent traffic director. It receives incoming requests and forwards them to the most appropriate server based on a predefined policy or real-time performance metrics.

2. Why load balancing is essential in distributed architectures

As user demand increases, applications need to scale beyond a single server. A single machine has limited processing power, memory, storage, and network bandwidth. If thousands or millions of users request resources at the same time, a single server becomes a performance bottleneck. Load balancing works around these limits by spreading traffic across multiple nodes, thereby improving throughput, availability, and resilience.

3. Types of load balancers

- Hardware Load Balancers: Dedicated physical appliances; expensive but very fast, typically used in enterprise systems.

- Software Load Balancers: Applications or services like HAProxy, NGINX, Envoy, Traefik.

- Cloud Load Balancers: Managed services offered by AWS, GCP, Azure; highly scalable and cost-effective.

- DNS Load Balancers: Use DNS round-robin or geo-based routing for global traffic distribution.

4. Load balancing algorithms

The improvement in performance depends largely on the algorithm used. Common load balancing strategies include:

- Round Robin: Requests are distributed sequentially to each server.

- Weighted Round Robin: Distributes requests in proportion to a weight assigned to each server, so more powerful servers receive a larger share of traffic.

- Least Connections: Directs traffic to the server with the fewest active connections.

- IP Hash: Hashes the client IP address to choose a server, so requests from the same client reach the same server (useful for session persistence).

- Resource-based balancing: Uses CPU, memory, and load metrics reported by each server for intelligent routing.
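The selection strategies listed above can be sketched in a few lines each. The following is a minimal illustration, not how any particular load balancer implements them: the class names, the `pick`/`release` methods, and the server names in the usage note are all hypothetical, and the weighted variant simply repeats each server in the rotation rather than interleaving it smoothly as production balancers often do.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers one after another in a fixed, repeating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class WeightedRoundRobin:
    """Repeat each server in the rotation as many times as its weight."""

    def __init__(self, weights):
        # weights: dict mapping server name -> positive integer weight
        expanded = [s for s, w in weights.items() for _ in range(w)]
        self._cycle = itertools.cycle(expanded)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the server that currently has the fewest active connections."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self):
        # Ties are broken by the order in which servers were registered.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection to `server` closes.
        self.active[server] -= 1


def ip_hash(client_ip, servers):
    """Map a client IP to a server deterministically via a hash."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

For example, `RoundRobin(["app-1", "app-2", "app-3"])` returns `app-1`, `app-2`, `app-3`, `app-1` on four successive `pick()` calls. Note that with plain modulo hashing, adding or removing a server remaps most clients, which is one reason IP hash gives only approximate persistence.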

5. Key benefits of load balancing

a. Improved performance & low latency

By spreading requests across multiple nodes, each server handles fewer concurrent tasks, reducing response times. This produces faster page loads and smoother user interactions.

b. High availability & fault tolerance

If one server fails, the load balancer automatically reroutes traffic to healthy nodes. This prevents system-wide outages and improves fault tolerance. With health checks, load balancers continuously monitor server status.

c. Horizontal scalability

Load balancers support the addition of new backend servers without downtime. This elasticity enables organizations to scale quickly based on real-time demand.

d. Even resource utilization

Without load balancing, some servers may be overworked while others remain idle. Efficient distribution ensures optimal resource usage across the infrastructure.

e. Security benefits

Load balancers often hide backend server details from clients, reducing attack exposure. Some load balancers include features like DDoS mitigation, rate limiting, and WAF integration.

6. Health checks and automated failover

Modern load balancers perform periodic health checks by sending ping requests, HTTP checks, or custom probes. If a server becomes unresponsive, the load balancer removes it from rotation. When the server recovers, it is automatically added back. This reduces downtime dramatically.
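The remove-and-restore behavior described above might look roughly like the sketch below. It assumes a caller-supplied `probe` function that returns `True` when a server answers, and it adds failure and recovery thresholds (`fail_threshold`, `rise_threshold`) so that a single dropped probe does not flip a server's state. Those thresholds and all names here are illustrative assumptions, not something the text above specifies.

```python
class HealthCheckedPool:
    """Track which servers are in rotation based on periodic probe results."""

    def __init__(self, servers, probe, fail_threshold=3, rise_threshold=2):
        self.probe = probe                    # callable: server -> bool
        self.fail_threshold = fail_threshold  # consecutive failures to remove
        self.rise_threshold = rise_threshold  # consecutive passes to restore
        self.healthy = {s: True for s in servers}
        self._fails = {s: 0 for s in servers}
        self._passes = {s: 0 for s in servers}

    def run_checks(self):
        """Probe every server once; call this on a timer in a real system."""
        for server in self.healthy:
            if self.probe(server):
                self._fails[server] = 0
                self._passes[server] += 1
                if (not self.healthy[server]
                        and self._passes[server] >= self.rise_threshold):
                    self.healthy[server] = True   # back into rotation
            else:
                self._passes[server] = 0
                self._fails[server] += 1
                if (self.healthy[server]
                        and self._fails[server] >= self.fail_threshold):
                    self.healthy[server] = False  # out of rotation

    def in_rotation(self):
        return [s for s, ok in self.healthy.items() if ok]
```

A routing algorithm would then choose only among `pool.in_rotation()`, so failed servers stop receiving traffic until they pass enough consecutive checks.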

7. Load balancing in microservices and container orchestration

In microservices architectures, especially those deployed on Kubernetes, load balancing is handled at multiple layers:

- Service-level load balancing: Kubernetes Service distributes traffic across Pods.

- Ingress load balancing: Controls HTTP/HTTPS routing for multiple services.

- Cluster load balancing: Managed by cloud providers using Layer 4 or Layer 7 routing.

This multi-layer approach ensures both internal and external traffic are routed efficiently.

8. Layer 4 vs Layer 7 load balancing

Layer 4 (Transport layer) load balancers route using only network and transport metadata such as IP addresses and TCP/UDP ports, without inspecting the request content. They are fast and efficient.

Layer 7 (Application layer) load balancers inspect HTTP headers, cookies, URLs, and payloads, enabling advanced routing like:

- routing by path (`/api/v1` to one service, `/images` to another)

- A/B testing

- content-based routing

- API gateway behavior

Layer 7 load balancers are highly flexible but typically add some overhead, because they must parse application-layer content before choosing a backend.
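Path-based routing, the first Layer 7 capability listed above, can be sketched as a prefix table in front of several server pools. This is a simplified illustration: the `PathRouter` class, the backend names in the usage note, and the plain string-prefix match are assumptions made for the example, and real Layer 7 balancers also match on hosts, headers, and cookies.

```python
import itertools


class PathRouter:
    """Route a request path to a backend pool by longest matching prefix."""

    def __init__(self, routes, default_pool):
        # routes: dict mapping URL path prefix -> list of backend names.
        # Sort longest prefix first so the most specific rule wins.
        self._routes = sorted(
            ((prefix, itertools.cycle(pool)) for prefix, pool in routes.items()),
            key=lambda item: len(item[0]),
            reverse=True,
        )
        self._default = itertools.cycle(default_pool)

    def route(self, path):
        for prefix, pool in self._routes:
            if path.startswith(prefix):
                return next(pool)  # round robin within the matched pool
        return next(self._default)
```

With `PathRouter({"/api/v1": ["api-1", "api-2"], "/images": ["img-1"]}, ["web-1"])`, a request for `/api/v1/users` goes to the API pool, `/images/logo.png` goes to `img-1`, and anything else falls through to `web-1`. A Layer 4 balancer could not make this decision, since the path is only visible after parsing the HTTP request.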

9. Real-world examples of load balancing

- Google Search: A very large volume of queries is distributed across many servers in multiple data centers.

- Netflix: Routes global streaming traffic through regional load balancers.

- AWS ALB/ELB: Automatically manages traffic for cloud applications at hyperscale.

- E-commerce: Prevents checkout failures during peak shopping periods.

10. Challenges and considerations

a. Session persistence: Some applications require that a user remain connected to the same server (sticky sessions).

b. Stateful applications: Load balancing is harder when server state is not shared; solutions include Redis session stores.

c. Distributed tracing: Because consecutive requests can land on different servers, debugging is harder without tracing tools like Jaeger or Zipkin.

d. Cost: Cloud load balancers incur usage fees; scaling must be planned.
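The shared-state approach mentioned under stateful applications can be illustrated with a small sketch. Here an in-memory `SessionStore` class stands in for an external store such as Redis; it and the `AppServer` class are hypothetical names used only to show that, once session data lives outside the servers, any server can handle any request and sticky sessions become optional.

```python
class SessionStore:
    """In-memory stand-in for an external session store such as Redis."""

    def __init__(self):
        self._data = {}

    def get(self, session_id):
        return self._data.get(session_id, {})

    def set(self, session_id, session):
        self._data[session_id] = session


class AppServer:
    """A stateless application server that keeps sessions in a shared store."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def add_to_cart(self, session_id, item):
        # Read the session from the shared store, not from local memory.
        session = self.store.get(session_id)
        cart = session.get("cart", []) + [item]
        self.store.set(session_id, {**session, "cart": cart})
        return cart


store = SessionStore()
app_1 = AppServer("app-1", store)
app_2 = AppServer("app-2", store)

app_1.add_to_cart("session-42", "book")        # first request hits app-1
cart = app_2.add_to_cart("session-42", "pen")  # next request hits app-2
```

After the second call, `cart` contains both items even though the two requests were served by different servers. The trade-off is an extra network round trip to the store on each request and a new component that must itself be kept available.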

11. Summary

Load balancing is a critical mechanism that distributes incoming traffic across multiple servers to improve performance, prevent overload, and ensure reliable operations. By offering better scalability, resilience, fault tolerance, and user experience, load balancing forms the backbone of modern distributed and cloud-native architectures. Most large-scale applications, from banking systems and social networks to streaming services and SaaS platforms, rely on load balancing to handle massive volumes of global traffic efficiently.