Introduction to Cache System Architectures

The rapid growth of data-intensive applications has increased the demand for efficient data retrieval. Traditional caches improve performance by keeping frequently used data in fast storage close to where it is consumed, which cuts the time needed to access it. However, as data volumes continue to grow, new cache system architectures are needed to keep retrieval fast. This article surveys recent developments in cache system architectures and their potential to transform the way we access and manage data.

Traditional Cache Systems: Limitations and Challenges

Traditional cache systems use a hierarchical approach: data is stored in multiple levels of cache, each with a different size and access time, with small fast levels placed in front of larger, slower ones. This approach has served well, but it has limitations. For example, traditional caches can suffer from thrashing: when the working set exceeds the cache's capacity, or when too many heavily used blocks compete for the same cache locations, blocks are evicted before they can be reused, so most accesses miss and performance drops sharply. In addition, traditional caches are not optimized for modern workloads, which often need fast access to large amounts of data. To address these challenges, researchers and developers have been exploring new cache system architectures that offer faster, more efficient, and more scalable data retrieval.
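The thrashing effect can be reproduced with a minimal sketch, assuming a single fully associative cache with least-recently-used (LRU) replacement; the class, function, and block names below are hypothetical and chosen only for illustration. A loop that cycles through a working set that fits in the cache misses only on its first pass, while a working set just one block larger than the cache makes LRU evict every block right before it is needed again.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used block on a miss."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.blocks = OrderedDict()  # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def access(self, block: str) -> None:
        if block in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block)  # mark as most recently used
            return
        self.misses += 1
        if len(self.blocks) >= self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block
        self.blocks[block] = None  # only presence matters for hit/miss counting

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def cyclic_hit_rate(capacity: int, working_set: int, rounds: int = 10) -> float:
    """Touch blocks 0..working_set-1 in a loop, `rounds` times, and return the hit rate."""
    cache = LRUCache(capacity)
    for _ in range(rounds):
        for i in range(working_set):
            cache.access(f"block-{i}")
    return cache.hit_rate()


if __name__ == "__main__":
    print(cyclic_hit_rate(capacity=4, working_set=4))  # 0.9: only the first 4 accesses miss
    print(cyclic_hit_rate(capacity=4, working_set=5))  # 0.0: every access misses (thrashing)
```

Real hardware caches are usually set-associative rather than fully associative, so conflicts within a set can cause the same collapse even when the total working set would fit.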

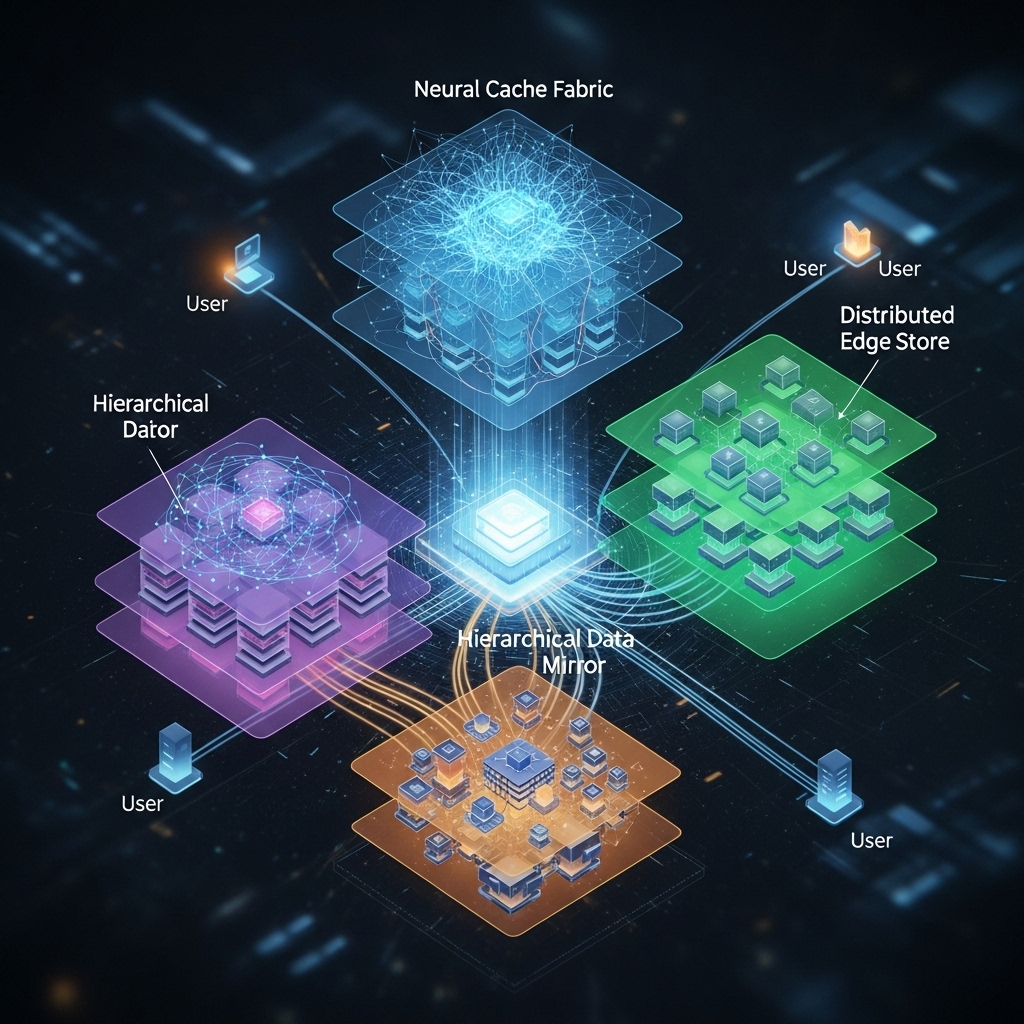

Innovative Cache System Architectures: Overview

In recent years, several innovative cache system architectures have been proposed, including hybrid cache systems, distributed cache systems, and non-volatile cache systems. Hybrid cache systems combine different memory technologies, such as SRAM and DRAM, to balance performance against power consumption. Distributed cache systems use a network of cache nodes to provide fast access to data across multiple locations. Non-volatile cache systems use emerging non-volatile memory technologies, such as phase-change memory (PCM) and spin-transfer torque magnetoresistive RAM (STT-MRAM), to provide fast, persistent storage. Together, these architectures aim to make data retrieval faster, more efficient, and more scalable.

Hybrid Cache Systems: A Case Study

A hybrid cache system combines memory technologies with different trade-offs, such as SRAM and DRAM, to balance performance against power consumption. For instance, a hybrid cache might keep frequently accessed data in a small SRAM tier and less frequently accessed data in a larger DRAM tier. Because SRAM is considerably faster than DRAM, serving hot data from SRAM improves performance, while DRAM's denser cells let the cache hold more data and typically leak less power per bit than SRAM, although DRAM must be refreshed periodically. A case study by researchers at the University of California, Berkeley, reported that a hybrid SRAM/DRAM cache can deliver up to a 30% improvement in performance and a 25% reduction in power consumption compared with traditional cache systems.
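The SRAM/DRAM split described above can be illustrated with a minimal sketch of an exclusive two-tier cache. It assumes LRU ordering within each tier, a promote-on-reuse policy, and made-up latency values; the class, constants, and policy are hypothetical, are not taken from the Berkeley study, and do not model power. New data enters the larger slow tier, a second access promotes it to the small fast tier, and the fast tier's least recently used key is demoted rather than discarded.

```python
from collections import OrderedDict

# Hypothetical per-access latencies in arbitrary units; real values depend on the hardware.
FAST_LATENCY = 1    # stands in for the small SRAM tier
SLOW_LATENCY = 4    # stands in for the larger DRAM tier
MISS_LATENCY = 100  # stands in for fetching from the next level of the hierarchy


class HybridCache:
    """Exclusive two-tier cache: hot keys live in the fast tier, warm keys in the slow tier."""

    def __init__(self, fast_capacity: int, slow_capacity: int) -> None:
        # Each OrderedDict keeps keys ordered from least to most recently used.
        # Both capacities are assumed to be at least 1.
        self.fast = OrderedDict()
        self.slow = OrderedDict()
        self.fast_capacity = fast_capacity
        self.slow_capacity = slow_capacity
        self.total_latency = 0
        self.accesses = 0

    def _insert_slow(self, key) -> None:
        if len(self.slow) >= self.slow_capacity:
            self.slow.popitem(last=False)  # drop the slow tier's least recently used key
        self.slow[key] = None

    def _insert_fast(self, key) -> None:
        if len(self.fast) >= self.fast_capacity:
            victim, _ = self.fast.popitem(last=False)
            self._insert_slow(victim)  # demote the fast tier's LRU key instead of discarding it
        self.fast[key] = None

    def access(self, key) -> int:
        """Serve one access, update both tiers, and return its latency."""
        self.accesses += 1
        if key in self.fast:
            self.fast.move_to_end(key)
            cost = FAST_LATENCY
        elif key in self.slow:
            del self.slow[key]
            self._insert_fast(key)  # reuse marks the key as hot: promote it
            cost = SLOW_LATENCY
        else:
            self._insert_slow(key)  # first-time data starts in the slow tier
            cost = MISS_LATENCY
        self.total_latency += cost
        return cost

    def average_latency(self) -> float:
        return self.total_latency / self.accesses if self.accesses else 0.0


if __name__ == "__main__":
    cache = HybridCache(fast_capacity=2, slow_capacity=8)
    print([cache.access("a") for _ in range(3)])  # [100, 4, 1]: miss, promote, fast hit
    print(cache.average_latency())                # 35.0
```

Placing new data in the slow tier first is one possible design choice: a one-pass scan over many new keys only churns the slow tier and cannot push the hot set out of the fast tier.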

Distributed Cache Systems: Benefits and Challenges

Distributed cache systems are another example of innovative cache system architectures. A distributed cache system uses a network of cache nodes to provide fast access to data across multiple locations. This approach can improve performance, scalability, and reliability. For example, a distributed cache spanning several data centers can serve data from a node close to the application, reducing latency and improving overall application performance. However, distributed caches also pose significant challenges: keeping cached copies consistent with the underlying data and with each other when that data changes (cache consistency and coherence), and coping with the network latency between nodes. To address these challenges, researchers and developers are exploring new protocols and algorithms for managing distributed cache systems.
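A distributed cache first has to decide which node holds each key. One widely used technique for this, swapped in here as an illustration rather than drawn from the text above, is consistent hashing: nodes and keys are hashed onto the same ring, and each key belongs to the first node clockwise from it, so adding or removing a node moves only a fraction of the keys. The sketch below assumes SHA-256 for ring positions and 100 virtual points per node; the class and node names are hypothetical, and it deliberately leaves out the consistency and coherence protocols, which need invalidation or replication on top of key placement.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Map a string to a 64-bit integer position on the ring."""
    digest = hashlib.sha256(value.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class ConsistentHashRing:
    """Assigns keys to cache nodes so that adding or removing a node moves few keys."""

    def __init__(self, nodes, replicas: int = 100) -> None:
        self.replicas = replicas  # virtual points per node, to spread load more evenly
        self.ring = []            # sorted list of (position, node) pairs
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        for i in range(self.replicas):
            bisect.insort(self.ring, (_hash(f"{node}#{i}"), node))

    def remove_node(self, node: str) -> None:
        self.ring = [(pos, n) for pos, n in self.ring if n != node]

    def node_for(self, key: str) -> str:
        """Return the first node clockwise from the key's position, wrapping at the end."""
        if not self.ring:
            raise ValueError("ring has no nodes")
        index = bisect.bisect_left(self.ring, (_hash(key),))
        return self.ring[index % len(self.ring)][1]


if __name__ == "__main__":
    ring = ConsistentHashRing(["cache-a", "cache-b", "cache-c"])
    keys = [f"user:{i}" for i in range(1000)]
    before = {k: ring.node_for(k) for k in keys}
    ring.add_node("cache-d")
    moved = [k for k in keys if ring.node_for(k) != before[k]]
    # Every key that moved now lives on the new node; all other keys stay where they were.
    print(len(moved), all(ring.node_for(k) == "cache-d" for k in moved))
```

With a plain `hash(key) % number_of_nodes` scheme, by contrast, adding a fourth node would remap most keys and empty most of the cache at once.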

Non-Volatile Cache Systems: Emerging Trends

Non-volatile cache systems are an emerging trend in cache system architectures. They use non-volatile memory technologies, such as phase-change memory (PCM) and spin-transfer torque magnetoresistive RAM (STT-MRAM), to provide fast, persistent storage. Because these memories retain data without power and need no refresh, they can hold large amounts of data with little standby power. Phase-change memory, for example, consumes very little power while idle, although its writes are slower and more energy-intensive than DRAM writes. In addition, because a non-volatile cache keeps its contents across power loss, it can reduce how often systems must fall back to slower disk-based storage. Researchers and developers are actively exploring non-volatile caches for a variety of applications, including data centers, cloud computing, and mobile devices.
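The persistence property can be sketched in software, with one loud assumption: an ordinary file stands in for non-volatile memory. Real PCM or STT-MRAM is byte-addressable and would be accessed through loads and stores or a persistent-memory library, not through JSON files, and the `PersistentCache` class here is hypothetical. The sketch is write-through (every `put` is persisted immediately) and shows the behavior that matters: after a simulated restart, the cache starts warm instead of empty.

```python
import json
import os
import tempfile


class PersistentCache:
    """Key-value cache whose contents survive a restart; a file emulates non-volatile memory."""

    def __init__(self, path: str) -> None:
        self.path = path
        self.data = {}
        if os.path.exists(path):
            with open(path, "r", encoding="utf-8") as f:
                self.data = json.load(f)  # warm start: reload what was cached before

    def get(self, key: str, default=None):
        return self.data.get(key, default)

    def put(self, key: str, value) -> None:
        self.data[key] = value
        self._persist()  # write-through: persist on every update

    def _persist(self) -> None:
        # Write a complete snapshot to a temporary file, then atomically replace the old
        # one, so a crash mid-write never leaves a half-written cache file behind.
        directory = os.path.dirname(os.path.abspath(self.path))
        fd, tmp_path = tempfile.mkstemp(dir=directory)
        try:
            with os.fdopen(fd, "w", encoding="utf-8") as f:
                json.dump(self.data, f)
                f.flush()
                os.fsync(f.fileno())
            os.replace(tmp_path, self.path)
        except BaseException:
            os.unlink(tmp_path)
            raise


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "cache.json")
        first = PersistentCache(path)
        first.put("session:42", {"user": "alice"})
        restarted = PersistentCache(path)  # simulates a process restart
        print(restarted.get("session:42"))  # {'user': 'alice'}
```

Rewriting the whole snapshot on every `put` keeps the sketch short but costs time proportional to the cache size; byte-addressable non-volatile memory avoids exactly this kind of serialization overhead.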

Conclusion: Revolutionizing Data Retrieval

In conclusion, innovative cache system architectures have the potential to transform data retrieval by providing faster, more efficient, and more scalable access to data. Hybrid, distributed, and non-volatile cache systems are three of the approaches being explored. Each brings its own challenges, but together they offer real opportunities to improve performance, reduce power consumption, and increase scalability. As data volumes continue to grow, cache architectures must keep pace with the demands of modern workloads, and continued work on these designs can help researchers and developers build retrieval systems that meet the needs of data-intensive applications.