Introduction to AWS for Large Language Models

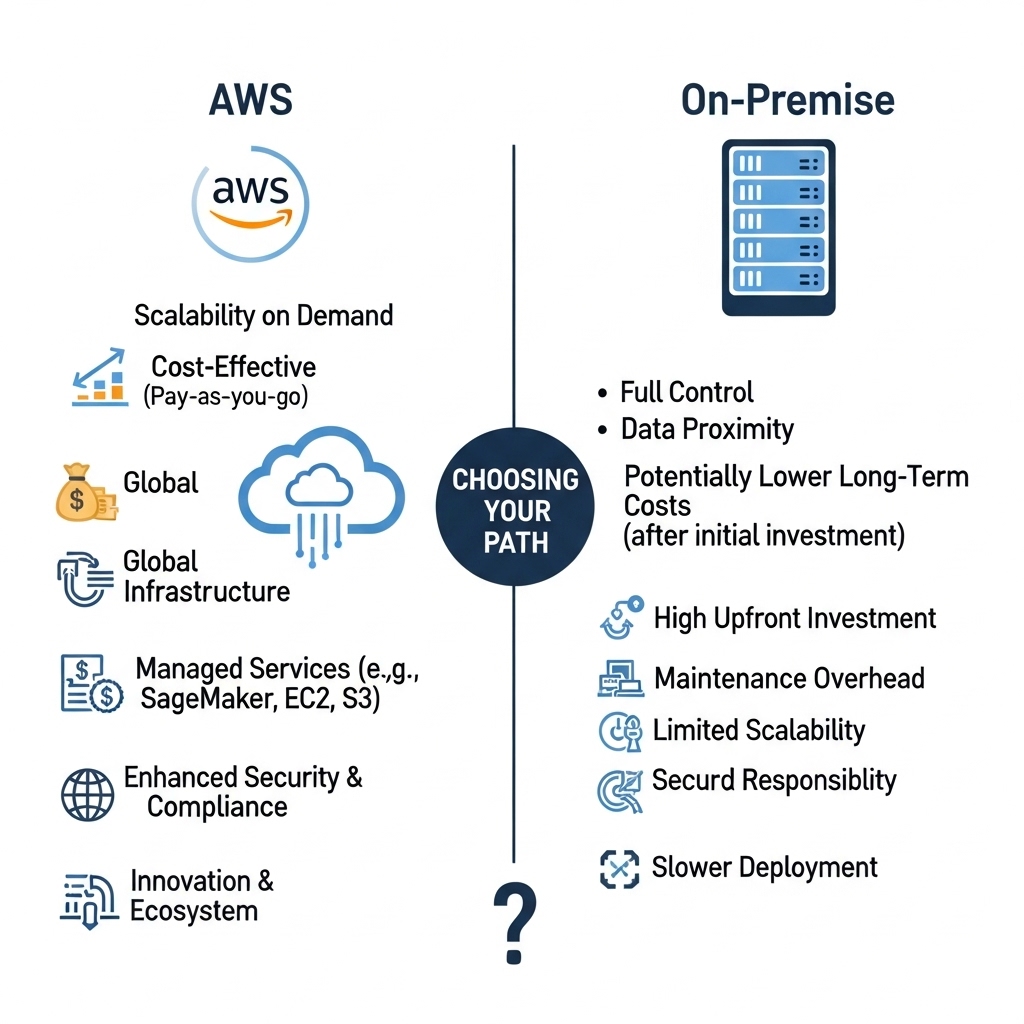

AWS (Amazon Web Services) has become a leading platform for businesses and organizations that want to build on large language models (LLMs). LLMs are AI models trained on large amounts of text to process and generate human language, with applications such as text generation, summarization, and language translation. In this article, we will explore the benefits of using AWS for large language models, including scalability, security, cost-effectiveness, and ease of integration.

Scalability and Performance

Scalability is one of the primary benefits of using AWS for LLMs. Services such as Amazon SageMaker, Amazon EC2, and Amazon S3 can be scaled up or down as the compute and storage needs of a model change. Businesses can therefore train and deploy LLMs without provisioning hardware in advance, although capacity is still subject to account service quotas and instance availability. For example, Amazon SageMaker provides built-in algorithms, support for common machine learning frameworks, and access to pretrained models such as BERT and RoBERTa. With SageMaker, businesses can deploy and manage LLMs on managed infrastructure rather than operating the underlying servers themselves.
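To make the idea of scaling up and down concrete, here is a minimal sketch of target-tracking auto scaling for a SageMaker endpoint through the Application Auto Scaling API. The endpoint name, policy name, capacity limits, and target value are assumptions chosen for illustration, and the final function needs boto3, AWS credentials, and an endpoint that already exists.

```python
"""Sketch: target-tracking auto scaling for a SageMaker endpoint variant."""


def scalable_target(endpoint_name, variant_name="AllTraffic",
                    min_instances=1, max_instances=4):
    """Build the arguments for register_scalable_target."""
    if not 0 < min_instances <= max_instances:
        raise ValueError("need 0 < min_instances <= max_instances")
    return {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "MinCapacity": min_instances,
        "MaxCapacity": max_instances,
    }


def scaling_policy(target, invocations_per_instance=100.0):
    """Build the arguments for put_scaling_policy (target tracking)."""
    return {
        "PolicyName": "llm-invocations-target-tracking",  # hypothetical name
        "ServiceNamespace": target["ServiceNamespace"],
        "ResourceId": target["ResourceId"],
        "ScalableDimension": target["ScalableDimension"],
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Assumed target: average invocations per instance per minute.
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance",
            },
            "ScaleOutCooldown": 60,   # seconds, illustrative
            "ScaleInCooldown": 300,   # seconds, illustrative
        },
    }


def apply_scaling(endpoint_name):
    """Send both requests to AWS. Not called here; requires a live endpoint."""
    import boto3  # imported lazily so the builders above run without it

    client = boto3.client("application-autoscaling")
    target = scalable_target(endpoint_name)
    client.register_scalable_target(**target)
    client.put_scaling_policy(**scaling_policy(target))


# "my-llm-endpoint" is a placeholder endpoint name.
print(scalable_target("my-llm-endpoint")["ResourceId"])
```

Keeping the request builders separate from the AWS call means the configuration can be reviewed or unit tested without touching a real account.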

Security and Compliance

Security is another key benefit of using AWS for LLMs. AWS provides security features such as encryption, access controls, and monitoring that help protect models and the data they process. For example, Amazon SageMaker supports encryption of data at rest and in transit and integrates with AWS Identity and Access Management (IAM) to control who can train, deploy, and invoke models. AWS also maintains compliance programs that help businesses meet regulations and standards such as HIPAA, PCI DSS, and GDPR. For instance, businesses in the healthcare industry can use AWS services as part of a HIPAA-compliant deployment, while businesses in the financial industry can do the same for PCI DSS. Compliance remains a shared responsibility: AWS secures the underlying infrastructure, and the business is responsible for configuring its own workloads and data handling correctly.
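As an illustration of the encryption and access-control features described above, the sketch below builds a least-privilege IAM policy that only allows invoking one SageMaker endpoint, plus the arguments for an S3 upload encrypted with a KMS key. The region, account ID, endpoint name, bucket, and key alias are placeholders, not values from any real deployment.

```python
import json


def invoke_only_policy(region, account_id, endpoint_name):
    """IAM policy document that allows invoking a single SageMaker endpoint."""
    arn = f"arn:aws:sagemaker:{region}:{account_id}:endpoint/{endpoint_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sagemaker:InvokeEndpoint",
                "Resource": arn,
            }
        ],
    }


def encrypted_upload_args(bucket, key, body, kms_key_id):
    """Arguments for S3 put_object using server-side encryption with KMS."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ServerSideEncryption": "aws:kms",
        "SSEKMSKeyId": kms_key_id,
    }


def upload_encrypted(bucket, key, body, kms_key_id):
    """Perform the upload. Not called here; requires boto3 and credentials."""
    import boto3  # imported lazily so the builders above run without it

    args = encrypted_upload_args(bucket, key, body, kms_key_id)
    boto3.client("s3").put_object(**args)


# All identifiers below are placeholders for illustration.
policy = invoke_only_policy("us-east-1", "123456789012", "my-llm-endpoint")
print(json.dumps(policy, indent=2))
```

Scoping the policy to a single action and a single resource means an application that only needs predictions cannot modify or delete the model.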

Cost-Effectiveness

AWS can also help businesses reduce the cost of deploying and managing LLMs. With AWS, businesses pay only for the computing resources they use, rather than purchasing and maintaining expensive hardware. This lowers the upfront cost of deploying LLMs, although ongoing costs still depend on instance type, usage hours, and data volume, and large GPU instances can be expensive when left running. For example, Amazon SageMaker offers a limited free tier and pay-as-you-go pricing, which lets businesses experiment with LLMs before committing to larger workloads. Additionally, tools such as AWS Cost Explorer and AWS Trusted Advisor help businesses track and optimize their spending on LLMs and other AWS services.
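The pay-as-you-go model comes down to simple arithmetic: cost is the hourly rate times the number of instances times the hours they actually run. The sketch below compares an always-on deployment with one that runs only during working hours. The hourly rate is a made-up figure for illustration and is not an actual AWS price.

```python
def monthly_cost(hourly_rate_usd, instance_count, hours_per_day, days=30):
    """On-demand cost: rate x instances x hours actually used."""
    return hourly_rate_usd * instance_count * hours_per_day * days


# Hypothetical rate in USD per instance-hour; check current AWS pricing.
HYPOTHETICAL_RATE = 1.50

always_on = monthly_cost(HYPOTHETICAL_RATE, instance_count=2, hours_per_day=24)
working_hours = monthly_cost(HYPOTHETICAL_RATE, instance_count=2, hours_per_day=10)

print(f"Always on:     ${always_on:.2f}")                   # $2160.00
print(f"Working hours: ${working_hours:.2f}")               # $900.00
print(f"Difference:    ${always_on - working_hours:.2f}")   # $1260.00
```

Under these assumed numbers, shutting instances down outside working hours cuts the bill by more than half, which is the kind of saving a pay-per-use model makes possible.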

Ease of Integration

AWS also makes it easy to integrate LLMs with other AWS services and applications. For example, businesses can deploy LLMs on Amazon SageMaker and combine them with managed language services such as Amazon Comprehend for text analysis and Amazon Translate for translation. This can help businesses build more comprehensive AI applications without managing the underlying infrastructure. Additionally, AWS provides APIs and software development kits (SDKs) for connecting LLMs to custom applications and services. For instance, businesses can use the SageMaker Python SDK or the AWS SDKs to call a deployed model from custom web and mobile backends, or use the Amazon Comprehend API to add entity and sentiment detection alongside their own models.
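As a rough sketch of what SDK-based integration can look like, the example below calls a deployed SageMaker endpoint from application code through the `sagemaker-runtime` client in boto3. The endpoint name is a placeholder, and the JSON payload shape (`inputs` plus `parameters`) is an assumption that matches some text-generation containers; the format a given model expects depends on how it was deployed.

```python
import json


def build_invoke_request(endpoint_name, prompt, max_new_tokens=128):
    """Build the arguments for sagemaker-runtime invoke_endpoint."""
    # Assumed payload shape; adjust to whatever the deployed model expects.
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return {
        "EndpointName": endpoint_name,
        "ContentType": "application/json",
        "Accept": "application/json",
        "Body": json.dumps(payload).encode("utf-8"),
    }


def generate(endpoint_name, prompt):
    """Call the endpoint. Not called here; requires boto3 and a live endpoint."""
    import boto3  # imported lazily so the builder above runs without it

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(**build_invoke_request(endpoint_name, prompt))
    # The response body is a stream; read it and decode the JSON result.
    return json.loads(response["Body"].read())


# "my-llm-endpoint" is a placeholder endpoint name.
request = build_invoke_request("my-llm-endpoint", "Translate 'hello' to French.")
print(request["EndpointName"], request["ContentType"])
```

A web or mobile backend would typically wrap `generate` behind its own API rather than exposing AWS credentials to client devices.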

Use Cases and Examples

LLMs on AWS can support a wide range of use cases. For instance, a streaming company like Netflix might use LLMs to enrich recommendations for TV shows and movies, drawing on text such as titles, descriptions, and reviews alongside viewing history and preferences. A retailer like Amazon might use LLMs to power customer service chatbots and virtual assistants. In the healthcare industry, businesses might use AWS to deploy LLMs that summarize clinical notes and reports to support diagnosis, while in the financial industry, businesses might deploy LLMs that help detect and prevent fraud by analyzing transaction descriptions and customer communications. These are illustrative scenarios rather than documented deployments, and they represent only a few of the possible applications of LLMs on AWS.

Conclusion

In conclusion, AWS offers several benefits for businesses looking to deploy and manage large language models: scalability, security, cost-effectiveness, and ease of integration. Services such as Amazon SageMaker, Amazon EC2, and Amazon S3 let teams train and deploy LLMs without operating the underlying infrastructure themselves. Whether you're a business looking to build more capable AI applications or a developer getting started with LLMs, AWS is a strong option to consider.
